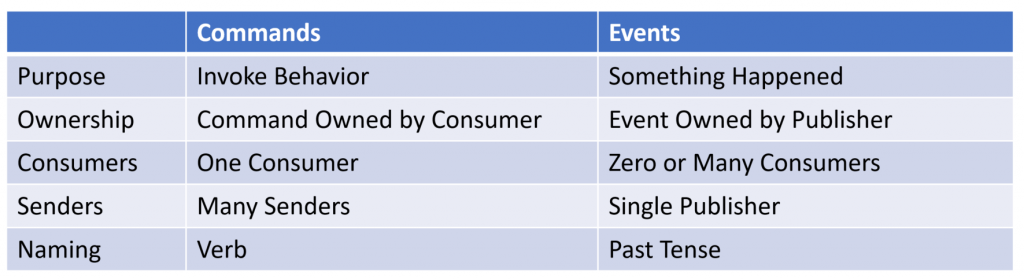

One of the building blocks of messaging is, you guessed it, messages! But there are different kinds of messages: Commands and Events. So, what’s the difference? Well, they have very distinct purposes, usage, naming, ownership, and more!

Commands

A command expresses the intent to invoke behavior. When you want something to happen within your system, you send a command. Your service provides some capability, and you need a way to expose it. That’s done through a command.

I didn’t mention CRUD. While you can expose Create, Update, and Delete operations through commands, I’m referring more to specific behaviors you want to invoke within your service. Let CRUD just be CRUD.

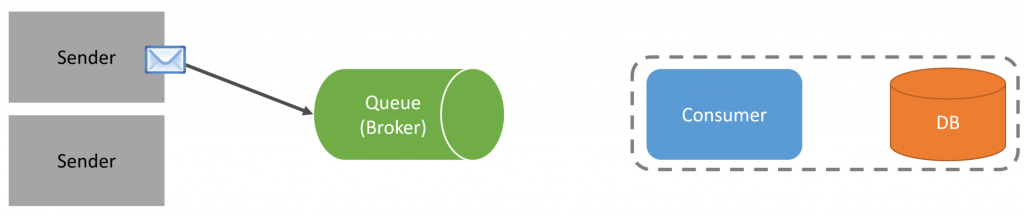

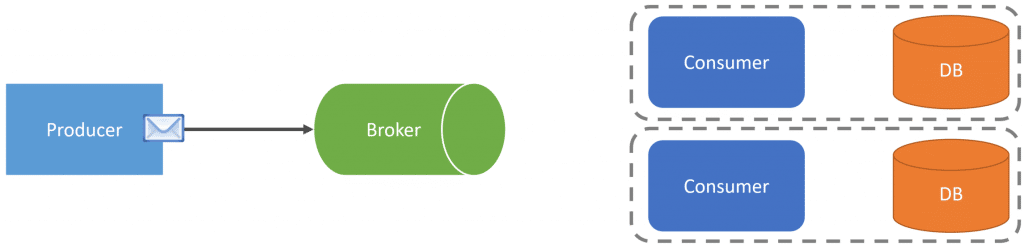

Commands have two parts. The first is the actual message (the command), which is the request and intent to invoke the behavior. The second is the consumer/handler for that command, which performs the requested behavior.

A command has only a single consumer/handler, which resides in the same logical boundary that defines and owns the command’s schema and definition.

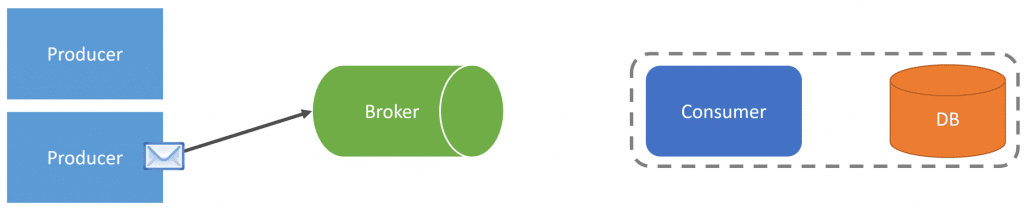

Commands can be sent from many different logical boundaries. There can be many different senders.

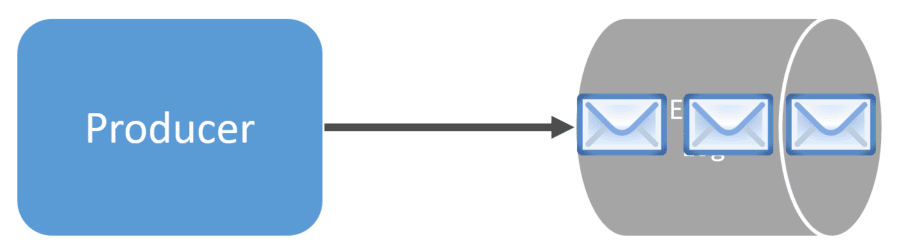

To illustrate this, the diagram below has many different senders, which can be different logical boundaries. The command (message) is being sent to a queue to decouple the sender and consumer.

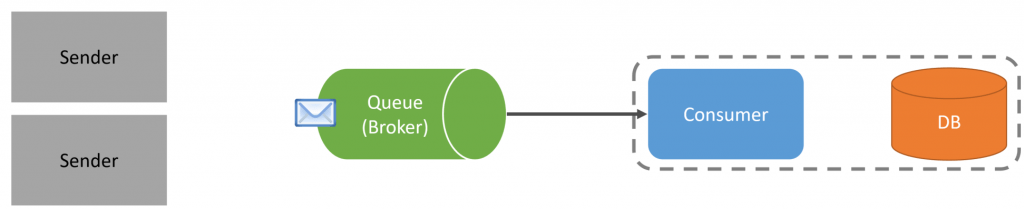

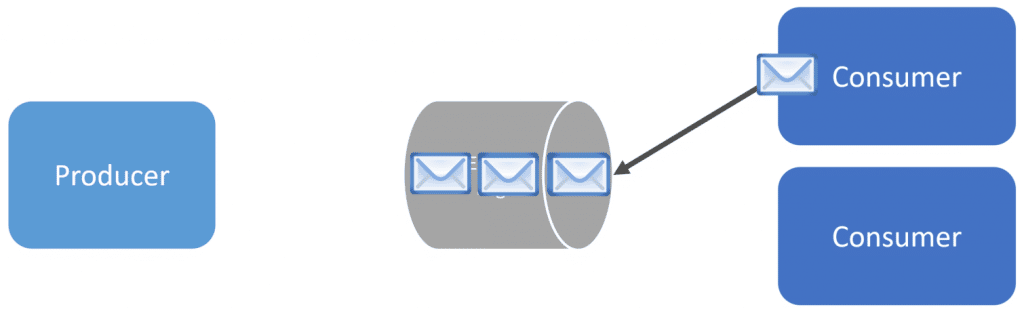

A single consumer/handler, which owns the command, receives (pulls) the message from the queue.

While processing the message, it may, for example, interact with its database.

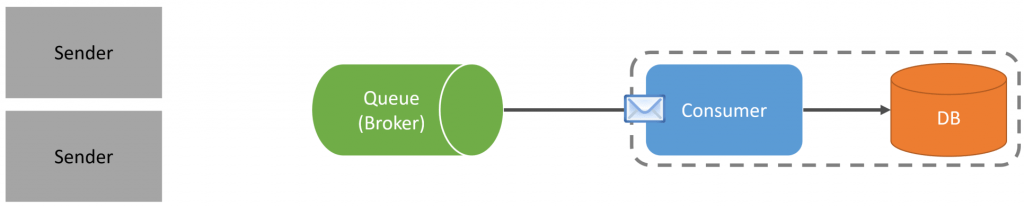

As mentioned, there can be many senders, so we could have a completely different logical boundary also sending the same command to the queue, which will be processed the same way by the consumer/handler.

Lastly, naming is important. Since a command is the intent to invoke behavior, name it with a verb, usually followed by a noun. Examples are PlaceOrder, ReceiveShipment, AdjustInventory, and InvoiceCustomer. Again, notice I’m not calling these commands CreateOrder, UpdateProduct, etc. These are specific behaviors related to actual business concepts within a domain.
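To make these semantics concrete, here is a minimal in-memory sketch. `CommandQueue`, `handle_place_order`, and the `orders_db` dictionary are hypothetical stand-ins for a real broker queue, the owning boundary’s handler, and its database. A production broker would also handle persistence, retries, and several instances of the one logical handler competing on the same queue.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaceOrder:
    """A command: verb + noun, expressing the intent to invoke behavior."""
    order_id: str
    customer_id: str


class CommandQueue:
    """Hypothetical in-memory stand-in for a queue on a message broker."""

    def __init__(self):
        self._messages = deque()
        self._handler = None

    def register_handler(self, handler):
        # A command has exactly one consumer/handler, owned by its boundary.
        if self._handler is not None:
            raise RuntimeError("a command can have only one handler")
        self._handler = handler

    def send(self, command):
        # Any number of senders, from any boundary, may enqueue the command.
        self._messages.append(command)

    def process_all(self):
        # The single handler pulls each message off the queue and executes it.
        while self._messages:
            self._handler(self._messages.popleft())


# Hypothetical database owned by the ordering boundary.
orders_db = {}


def handle_place_order(command):
    orders_db[command.order_id] = {"customer": command.customer_id, "status": "placed"}
```

Two different boundaries (say, a web checkout and a back-office sales tool) can both call `send` with `PlaceOrder`, and the one registered handler processes both; `register_handler` refuses a second handler, mirroring the single-consumer rule.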

Events

Events tell other parts of your system that something occurred within a service boundary. Something happened. Often, an event is the result of a command completing.

Events have two parts. The first is the actual message (the event), which is the notification that something occurred. The second is the consumer/handler for that event, which reacts by executing something in response to the event.

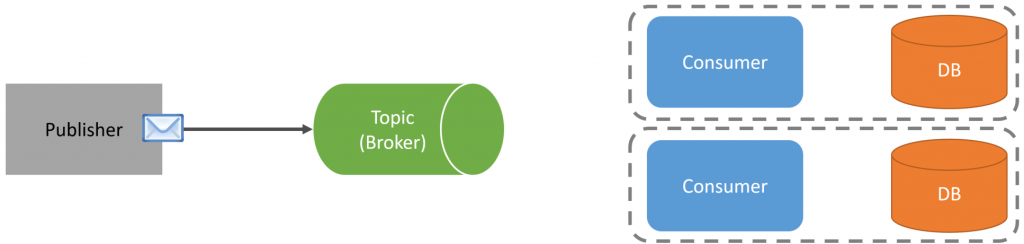

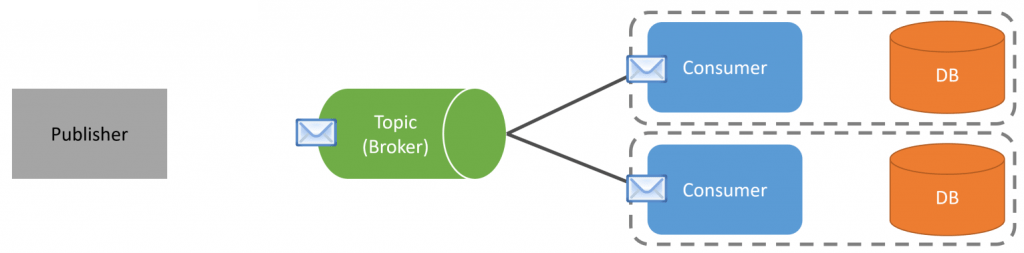

There is only one logical boundary that owns the schema and publishes an event.

Event consumers can live within many different logical boundaries. There may not be a consumer for an event at all, so an event can have zero or many different consumers.

To illustrate, the single publisher that owns the event will create and publish it to a Topic on a Message Broker.

That event will then be received by both consumers. Each consumer will receive a copy of the event and be able to execute independently in isolation from each other. This means that if one consumer fails, it will not affect the other.

Naming is important. Events are facts that something happened. They should be named in the past tense which reflects what occurred. Examples are OrderPlaced, ShipmentReceived, InventoryAdjusted, and PaymentProcessed. These are the result of specific business concepts.
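The contrast with commands shows up clearly in code. This minimal sketch assumes a hypothetical in-memory `Topic` standing in for a topic on a message broker: it delivers each published `OrderPlaced` event to every subscriber and isolates failures, so one failing consumer does not stop the others. Real brokers deliver asynchronously and independently per consumer; this synchronous loop only models the semantics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderPlaced:
    """An event: a past-tense fact owned and published by one boundary."""
    order_id: str


class Topic:
    """Hypothetical in-memory stand-in for a topic on a message broker."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, handler):
        # Zero or many consumers; the publisher never learns who they are.
        self._subscribers.append(handler)

    def publish(self, event):
        """Deliver the (immutable) event to every subscriber; return failures."""
        failures = []
        for handler in self._subscribers:
            try:
                handler(event)
            except Exception as exc:
                # One consumer failing does not stop delivery to the others.
                failures.append((handler.__name__, exc))
        return failures
```

Publishing to a topic with no subscribers is perfectly valid and simply returns an empty list of failures.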

This article originally appeared on codeopinion.com. To read the full article, click here.

Do you want to use Kafka? Or do you need a message broker and queues? While they can seem similar, they have different purposes. I’m going to explain the differences, so you don’t try to brute force patterns and concepts in Kafka that are better used with a message broker.

Partitioned Log

Kafka is a log. Specifically, a partitioned log. I’ll discuss the partition part later in this post and how that affects ordered processing and concurrency.

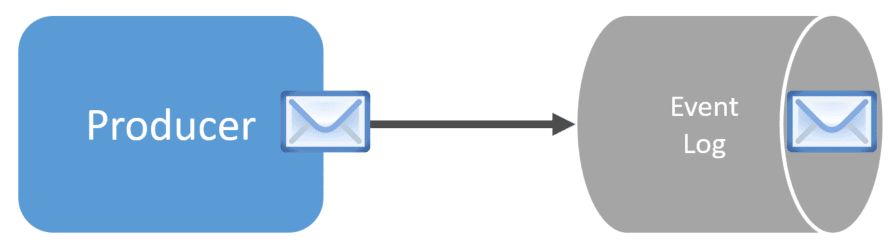

When a producer publishes new messages, generally events, to a log (a topic with Kafka), it appends them.

Events aren’t removed from a topic when they are consumed; they are removed only according to the topic’s retention period. You could keep all events forever or purge them after a period of time. This is an important point of contrast with a queue.

With an event-driven architecture, you can have one service publish events and have many different services consuming those events. It’s about decoupling. The publishing service doesn’t know who is consuming, or if anyone is consuming, the events it’s publishing.

In this example, we have a topic with three events. Each consumer works independently, processing messages from the topic.

Because events are not removed from the topic, a new consumer could start by consuming the first event on the topic. Kafka tracks an offset per consumer group for each partition of a topic. I’ll get to consumer groups and partitions shortly. This allows consumers to process new events as they are appended to the topic. However, it also allows existing consumers to re-process existing messages by changing the offset.

Just because a consumer processes an event from a topic does not mean that they cannot process it again or that another consumer can’t consume it. The event is not removed from the topic when it’s consumed.
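A toy model can help show why replay works. The sketch below assumes a hypothetical `PartitionedLog` class (not the Kafka client API) that appends events and tracks an offset per consumer group and partition. Consuming never deletes anything, a new group starts from the first event, and `seek` rewinds an existing group. Retention is not modeled, and real Kafka clients commit offsets as a separate step rather than on every poll.

```python
from collections import defaultdict


class PartitionedLog:
    """Hypothetical toy model of a Kafka topic (not the Kafka client API)."""

    def __init__(self, partitions=1):
        self._partitions = [[] for _ in range(partitions)]
        # Committed offset per (consumer group, partition): next index to read.
        self._offsets = defaultdict(int)

    def append(self, event, partition=0):
        # Producers only append; consuming never removes anything.
        self._partitions[partition].append(event)

    def poll(self, group, partition=0):
        # Return events after the group's offset, then advance (commit) it.
        key = (group, partition)
        events = self._partitions[partition][self._offsets[key]:]
        self._offsets[key] = len(self._partitions[partition])
        return events

    def seek(self, group, offset, partition=0):
        # Rewinding the offset lets an existing group re-process events.
        self._offsets[(group, partition)] = offset
```

Because offsets are keyed by group, a second group such as "analytics" reads the full history even after "billing" has consumed it.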

Commands & Events

A lot of the trouble I see with using Kafka revolves around applying various patterns or semantics typical with queues or a message broker and trying to force it with Kafka. An example of this is Commands.

There are two kinds of messages: Commands and Events. Some will say Queries are also messages, but I disagree in the context of asynchronous messaging.

Commands are about invoking behavior. There can be many producers of a command. There is a required single consumer of a command. The consumer will be within the logical boundary that owns the definition/schema of the command.

Events are about notifying other parts of your system that something has occurred. There is only a single publisher of an event. The logical boundary that publishes an event owns the schema/definition. There may be many consumers of an event or none.

Commands and events have different semantics. They serve very different purposes, and those purposes also affect coupling differently.

By this definition, how can you publish a command to a Kafka topic and guarantee that only a single consumer will process it? You can’t.

Queue

This is where a message broker with queues differs from Kafka.

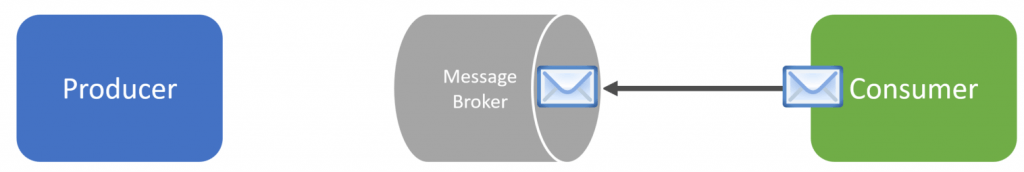

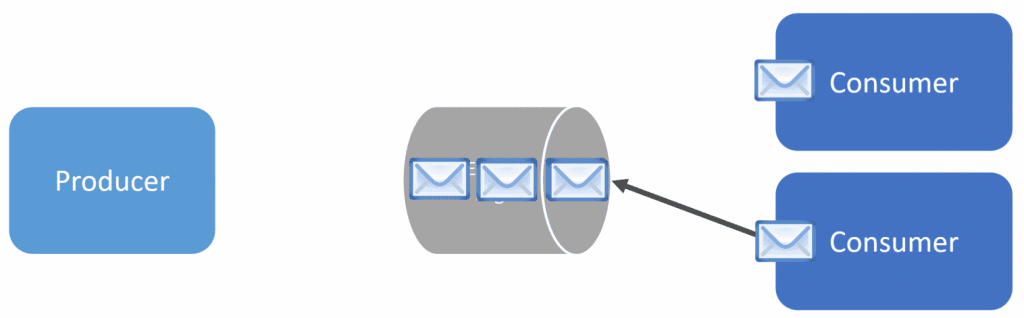

When you send a command to a queue, there’s going to be a single consumer that will process that message.

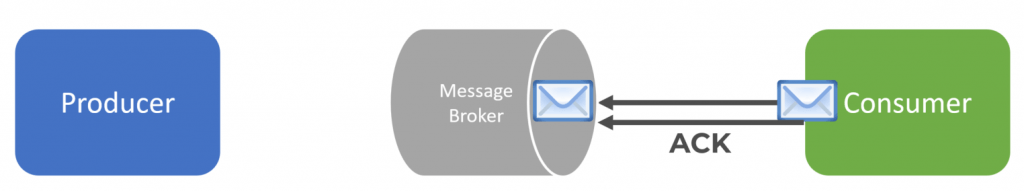

When the consumer finishes processing the message, it will acknowledge back to the broker.

At this point, the broker will remove the message from the queue.

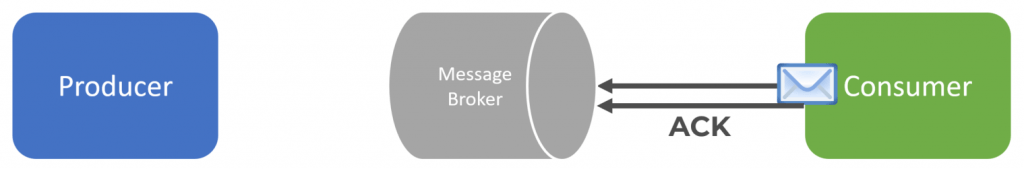

The message is gone. The consumer cannot consume it again, nor can any other consumer.
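For contrast with the log model, here is a minimal sketch of queue semantics, assuming a hypothetical in-memory `AckQueue`: a received message is held in flight so no other consumer gets it, and `ack` deletes it permanently. Real brokers also redeliver a message if its consumer fails or times out before acknowledging; that path is omitted here.

```python
import itertools


class AckQueue:
    """Hypothetical in-memory model of a broker queue with acknowledgements."""

    def __init__(self):
        self._next_id = itertools.count(1)
        self._ready = {}       # message_id -> body, waiting to be delivered
        self._in_flight = {}   # message_id -> body, delivered but not yet acked

    def send(self, body):
        message_id = next(self._next_id)
        self._ready[message_id] = body
        return message_id

    def receive(self):
        # Hand the oldest ready message to exactly one consumer.
        if not self._ready:
            return None
        message_id = next(iter(self._ready))
        self._in_flight[message_id] = self._ready.pop(message_id)
        return message_id, self._in_flight[message_id]

    def ack(self, message_id):
        # The consumer finished: the broker deletes the message for good.
        del self._in_flight[message_id]
```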

This article originally appeared on codeopinion.com. To read the full article, click here.

Many people wonder what the difference is between monitoring and observability. While monitoring is simply watching a system, observability means truly understanding a system’s state. DevOps teams leverage observability to debug their applications or troubleshoot the root cause of system issues. Peak visibility is achieved by analyzing the three pillars of observability: logs, metrics and traces.

Depending on whom you ask, some treat MELT (metrics, events, logs and traces) as the four pillars of essential telemetry data, but we’ll stick with the three core pillars for this piece.

Metrics

Metrics are numerical representations of data measured over intervals of time, often stored in a time-series database. DevOps teams can apply prediction and mathematical modeling to their metrics to understand what happened in their systems in the past, what is happening now, and what is likely to happen in the future.

Metric data is optimized for storage over long periods of time and, as a result, can be queried easily. Many teams build dashboards from their metrics to visualize what is happening in their systems, or use them to trigger real-time alerts when something goes wrong.
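As an illustration of turning metrics into alerts, the following sketch keeps timestamped samples in a sliding window and flags when the window average crosses a threshold. `RollingMetric`, the 60-second window, and the 80% CPU threshold are hypothetical choices; a real setup would store samples in a time-series database and evaluate alert rules there.

```python
from collections import deque


class RollingMetric:
    """Hypothetical sliding-window metric with a simple threshold alert."""

    def __init__(self, window_seconds, threshold):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._samples = deque()  # (timestamp, value) pairs, oldest first

    def record(self, timestamp, value):
        self._samples.append((timestamp, value))
        # Drop samples that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self._samples and self._samples[0][0] <= cutoff:
            self._samples.popleft()

    def average(self):
        if not self._samples:
            return 0.0
        return sum(value for _, value in self._samples) / len(self._samples)

    def should_alert(self):
        return self.average() > self.threshold
```

For example, with CPU samples of 50 at t=0 and 70 at t=30 the average is 60 and no alert fires; a sample of 95 at t=70 pushes the t=0 sample out of the window, raising the average to 82.5 and triggering the alert.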

Traces

Traces help DevOps teams get a picture of how applications are interacting with the resources they consume. Many teams that use microservices-based architectures rely heavily on distributed tracing to understand when failures or performance issues occur.

Software engineers sometimes set up request tracing by using instrumentation code to track and troubleshoot certain behaviors within their application’s code. In distributed software architectures like microservices-based environments, distributed tracing can follow requests through each isolated module or service.
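The core idea of distributed tracing is that every unit of work (a span) records a shared trace ID plus its parent span’s ID, and that this context travels with each request. The sketch below models two services in one process. The `span` helper and the `trace-id`/`parent-span-id` header names are hypothetical; standards such as W3C Trace Context define real header formats, and libraries such as OpenTelemetry provide production instrumentation.

```python
import time
import uuid
from contextlib import contextmanager

SPANS = []  # finished spans; a real system would export these to a tracing backend


@contextmanager
def span(name, trace_id=None, parent_id=None):
    """Record one timed unit of work; a new trace starts when trace_id is None."""
    record = {
        "trace_id": trace_id or uuid.uuid4().hex,
        "span_id": uuid.uuid4().hex[:16],
        "parent_id": parent_id,
        "name": name,
    }
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        SPANS.append(record)


def inventory_service(headers):
    # The downstream service continues the trace from the propagated context.
    with span("reserve-stock", headers["trace-id"], headers["parent-span-id"]):
        pass  # real work would happen here


def order_service():
    with span("place-order") as root:
        # Propagate trace context to the next service (hypothetical header names).
        inventory_service({"trace-id": root["trace_id"], "parent-span-id": root["span_id"]})
```

After `order_service()` runs, both spans share one trace ID, and the inventory span points at the order span as its parent, which is what lets a tracing tool reassemble the request path across services.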

Logs

Logs are perhaps the most critical and most difficult-to-manage piece of the observability puzzle when you’re using traditional, one-size-fits-all observability tools. Logs are machine-generated data produced by the applications, cloud services, endpoint devices, and network infrastructure that make up modern enterprise IT environments.

While logs are simple to aggregate, storing and analyzing them using traditional tools like application performance monitoring (APM) can be a real challenge.
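One common way to make machine-generated logs easier to aggregate and analyze is to emit them as structured records, one JSON object per line, rather than free text. Below is a minimal sketch using Python’s standard `logging` module; the `JsonFormatter` class, the `get_json_logger` helper, and the `fields` attribute convention are assumptions of this example, not a standard.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object on one line."""

    def format(self, record):
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as extra={"fields": {...}} (this sketch's convention).
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


def get_json_logger(name):
    """Return a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A call such as `get_json_logger("payments").error("charge failed for %s", "o-1", extra={"fields": {"order_id": "o-1"}})` then produces a line that a log platform can filter by `order_id` without parsing free text.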

This article originally appeared on chaossearch.io. To read the full article, click here.

What is AIOps (Artificial Intelligence for IT Operations)

The volume of data that IT systems generate nowadays is overwhelming, and without intelligent monitoring and analysis tools, it can result in missed opportunities, missed alerts, and expensive downtime. However, with the advent of Machine Learning and Big Data, a new category of IT operations tools has emerged, called AIOps.

AIOps can be defined as the practical application of Artificial Intelligence to augment, support, and automate IT processes. It leverages Machine Learning, Natural Language Processing, and Analytics to monitor and analyze complex real-time data, helping teams quickly detect and resolve issues.

With AIOps, Ops teams can tame the vast complexity and volume of data generated by their modern IT environments to prevent outages, maintain uptime and achieve continuous service assurance. AIOps enables organizations to operate at the speed demanded by modern businesses and deliver a great user experience.

The following are four of the top five reasons why the need for AIOps is increasing.

Analytics has become challenging due to the proliferation of monitoring tools.

Using disparate monitoring tools makes achieving complete visibility across an enterprise service or application difficult. It also makes it nearly impossible to correlate and analyze multiple application performance metrics.

AIOps can help deliver a single pane of analysis across all domains, which helps organizations ensure an optimal customer experience. AIOps helps reduce false positives, correlate alerts and identify root causes without technicians having to switch between multiple tools.

The sheer volume of alerts is becoming unmanageable.

With thousands of alerts per month on average that have to be dealt with proactively, it’s no wonder AI and Machine Learning are now becoming necessary. AIOps can help with issue detection, collaboration across teams, and alert correlation across all tools, reducing downtime and the time spent analyzing these alerts.
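Alert correlation can start much simpler than machine learning. The sketch below is a rule-based stand-in, not an AIOps algorithm: it groups alerts that share a source and type and arrive within a time window of the previous one into a single incident. The function name, the alert fields, and the five-minute window are hypothetical.

```python
def correlate_alerts(alerts, window_seconds=300):
    """Collapse related alerts into incidents.

    Alerts with the same (source, kind) that arrive within `window_seconds`
    of the previous matching alert join the same incident.
    """
    incidents = []
    open_incidents = {}  # (source, kind) -> most recent incident for that key
    for alert in sorted(alerts, key=lambda a: a["time"]):
        key = (alert["source"], alert["kind"])
        incident = open_incidents.get(key)
        if incident and alert["time"] - incident["last_seen"] <= window_seconds:
            incident["count"] += 1
            incident["last_seen"] = alert["time"]
        else:
            incident = {"source": alert["source"], "kind": alert["kind"],
                        "first_seen": alert["time"], "last_seen": alert["time"],
                        "count": 1}
            open_incidents[key] = incident
            incidents.append(incident)
    return incidents
```

Three queue-depth alerts from the same queue manager a minute apart become one incident with a count of 3, so an operator looks at one item instead of three.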

Predictive analysis is required to deliver a superior user experience.

Every business today is one lousy user experience away from a lost customer. Considering this, the premium that companies place on ensuring an exceptional user experience is not surprising. Delivering a great user experience with predictive analytics is among the most crucial business outcomes, and as such, predictive analytics is the most sought-after AIOps capability.

Enormous expected benefits of AIOps

Numerous IT professionals believe that AIOps will deliver actionable insights to help automate and enhance overall IT operations functions. They also expect AIOps to increase efficiency, speed up remediation, improve the user experience, and reduce operational complexity. This is primarily achieved through AIOps’ automation capabilities, including automating data analytics and predictive insights across the entire toolchain.

This article originally appeared on markettechpost.com. To read the full article, click here.

Nastel will be demonstrating its new i2M AIOps release at the Gartner IT Infrastructure, Operations & Cloud Strategies Conference in Las Vegas on Dec 6–8, 2022

Infrastructure and operations leaders have a critical role to play in transforming and enhancing business capabilities, focusing on enhancing customer experiences and developing staff and skills while building and operating resilient, sustainable, secure, and scalable platforms and systems. The Gartner conference is the place to find objective guidance and insights on these topics and create an effective pathway to the future.

Nastel will be exhibiting the latest release of its platform for managing and monitoring integration-centric applications and the surrounding infrastructure in dynamic hybrid cloud and legacy environments, as well as providing business and IT insight from the transactions themselves.

Hari Mohanan, Nastel Vice President of Global Sales, said “We’re very excited to be back at the Gartner conference and shining the spotlight on the importance of integration infrastructure management. Integration technologies are the engine of the enterprise. Business is all about transactions; money and products being passed between different companies and people. If there’s a failure in the integration infrastructure, there’s a failure in the company itself.”

“IT environments are changing on a daily basis,” explains Albert Mavashev, CTO of Nastel. “Infrastructures dynamically scale and migrate using cloud and containers. The monitoring and management software needs to keep up with that. We’re looking forward to discussing these issues at the show.”

Nastel Technologies will be available in the Infrastructure Discovery, Monitoring and Management area of the exhibition hall at the conference.

Related Resources:

• Conference Details

• Nastel’s Integration Infrastructure Management

Jennifer Mavashev

Nastel Technologies, Inc.

+1 516-456-2015

jmavashev@nastel.com

What is AIOps (artificial intelligence for IT operations)?

Artificial intelligence for IT operations (AIOps) is an umbrella term for the use of big data analytics, machine learning (ML) and other artificial intelligence (AI) technologies to automate the identification and resolution of common IT issues. The systems, services and applications in a large enterprise produce immense volumes of log and performance data. AIOps uses this data to monitor assets and gain visibility into dependencies within and outside of IT systems.

An AIOps platform should bring three capabilities to the enterprise:

1. Automate routine practices.

Routine practices include user requests, as well as non-critical IT system alerts. For example, AIOps can enable a help desk system to process and fulfill a user request to provision a resource automatically. AIOps platforms can also evaluate an alert and determine that it does not require action because the relevant metrics and supporting data available are within normal parameters.

2. Recognize serious issues faster and with greater accuracy than humans.

IT professionals might address a known malware event on a noncritical system but ignore an unusual download or process starting on a critical server because they are not watching for this threat. AIOps addresses this scenario differently, prioritizing the event on the critical system as a possible attack or infection because the behavior is out of the norm, and deprioritizing the known malware event by running an antimalware function.

3. Streamline the interactions between data center groups and teams.

AIOps provides each functional IT group with relevant data and perspectives. Without AI-enabled operations, teams must share, parse and process information by meeting or manually sending around data. AIOps should learn what analysis and monitoring data to show each group or team from the large pool of resource metrics.
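Points 1 and 2 both depend on knowing what “normal” looks like for a metric. One minimal way to sketch that is a statistical baseline: `is_anomalous` flags a value more than three standard deviations from recent history, and `triage` suppresses alerts whose metric is within the baseline while escalating outliers. Real AIOps platforms use richer models (seasonality, multivariate correlation); the names and the threshold here are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it lies more than `z_threshold` standard deviations
    from the mean of recent `history`."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # flat baseline: any change is unusual
    return abs(latest - mu) / sigma > z_threshold


def triage(alert, history):
    # Suppress alerts within normal parameters; escalate out-of-the-norm values.
    return "escalate" if is_anomalous(history, alert["value"]) else "suppress"
```

With a baseline of `[100, 102, 98, 101, 99, 100]` (mean 100, sample standard deviation about 1.41), a reading of 103 is suppressed while a reading of 250 is escalated.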

Use Cases

AIOps is generally used in companies that use DevOps or cloud computing and in large, complex enterprises. AIOps aids teams that use a DevOps model by giving development teams additional insight into their IT environment, which then gives the operations teams more visibility into changes in production. AIOps also reduces many of the risks involved in hybrid cloud platforms by aiding operators across their IT infrastructure. In many cases, AIOps can help any large company that has an extensive IT environment. Being able to automate processes, recognize problems in an IT environment earlier and aid in smoothing communications between teams will help a majority of large companies with extensive or complicated IT environments.

This article originally appeared on techtarget.com. To read the full article, click here.

The Prometheus and Grafana combination is rapidly becoming ubiquitous in the world of IT monitoring. There are many good reasons for this. They are free, open-source toolkits, so they are easy to get hold of and try out, and there is a lot of crowdsourced help available online for getting started, including documentation from the developers of middleware such as IBM MQ and RabbitMQ.

At Nastel, where we focus on supporting integration infrastructure for leading-edge enterprise customers, we are finding that many of our customers have made policy decisions to use Prometheus and Grafana across the board for their monitoring. However, they are finding that it’s not sufficient in all situations.

Business Agility and Improving Time to Market

Speed is King. The business is constantly requesting new and updated applications and architecture, driven by changes in customer needs, competition, and innovation. Application developers must not be the blocker to business. We need business changes at the speed of life, not at the speed of software development.

For Black Friday, a large ecommerce site that typically has 3,000 concurrent visitors suddenly had to handle 1 million in a day! How can they handle more than 300 times as many visitors? If they can’t cope, Black Friday could turn into a very red one, with a very public outage: a high-profile loss with serious reputational damage.

Evolving IT

IT is constantly evolving. Most companies moved their IT to the agile development methodology and then they added DevOps with automation, continuous integration, continuous deployment, and constant validation against the ever-changing requirements.

With agile, companies reduced application development time from two years to six months. With DevOps it went down to a month, and now, adding in cloud, companies like Netflix and Eli Lilly can get from requirements to code, to test, to production in an hour. They’ve evolved their architectures from monolithic applications to service-oriented architectures to multi-cloud, containers and microservices. Microservices can quickly be pushed out by DevOps and moved between clouds, and they can be ephemeral, serverless and containerized. They use hyperscale clouds, so named because they have the elasticity to grow and shrink based on these dynamic needs. They have stretch clusters and cloud bursting so that burstable applications can extend from on-premises into a large temporary cloud environment with the necessary resources for Black Friday and then scale down again.

So now a single business transaction can flow across many different services, hosted on different servers in different countries, sometimes managed by different companies. The microservices are continuously getting updated, augmented, and migrated in real time. The topology of the application can change significantly on a daily basis.

Supporting Agile IT

So how is all this IT supported and how do we know if it is working? How do we get alerted if there is a break down in the transaction flow or if a message gets lost? How can you monitor an application that was spun up or moved for such a short period of time?

By building Grafana dashboards on top of the Prometheus platform, a monitoring, visualization and alerting solution can be constructed which provides visibility of the environment. The question is whether this can be built and adjusted fast enough to keep up with the everchanging demands of the business and IT.
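Prometheus works by scraping metrics that applications or exporters expose over HTTP in a simple text exposition format, and Grafana then queries Prometheus to draw dashboards. To show what a scrape returns, here is a minimal renderer for that format. The metric name `mq_queue_depth` and its label are hypothetical, and label-value escaping is omitted, so in practice you would use an official Prometheus client library instead.

```python
def render_prometheus(metrics):
    """Render metrics in Prometheus' text exposition format.

    `metrics` maps a metric name to (type, help text, samples), where samples
    maps a tuple of (label, value) pairs to a number. Label-value escaping is
    omitted, so this is a sketch rather than a complete client.
    """
    lines = []
    for name, (metric_type, help_text, samples) in metrics.items():
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {metric_type}")
        for labels, value in samples.items():
            label_text = ",".join(f'{key}="{val}"' for key, val in labels)
            series = f"{name}{{{label_text}}}" if label_text else name
            lines.append(f"{series} {value}")
    return "\n".join(lines) + "\n"
```

Rendering a gauge with one labeled sample yields `# HELP`, `# TYPE`, and a line such as `mq_queue_depth{queue="ORDERS.IN"} 42`, which is what Prometheus stores and Grafana later charts.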

Nastel’s Integration Infrastructure Platform

Nastel’s Integration Infrastructure Management platform addresses this. Its XRay component dynamically builds a topology view based on the real data that flows through the distributed application. It gives end-to-end observability of the true behavior of your application in a constantly changing agile world. It highlights problem transactions based on anomalies, and enables you to drill down to those transactions, carry out root cause analysis and perform remediation. The Nastel solution receives data from across the IT estate, including from Prometheus, and allows rapid creation and modification of visualizations and alerts in a single tool.

Furthermore, the Nastel technology draws on almost 30 years of experience supporting large production middleware environments. It has deep, granular knowledge of the technologies and issues, and uses learned and derived data to provide AIOps and Observability, in addition to traditional event-based monitoring.

Enhancing your existing infrastructure

Companies use Nastel’s Integration Infrastructure Management (i2M) solution to enhance their existing tools. We take a proactive SRE approach, based on an in-depth understanding of the middleware and monitoring history, to prevent outages altogether by monitoring key derived indicators such as:

- Latency – time to service a request

- Traffic – demand placed on the system

- Error Rate – rate of failed requests

- Saturation – which resources are most constrained
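These four indicators (often called the “golden signals” in SRE practice) can be computed from per-request records over a time window. This sketch assumes hypothetical inputs: a list of `Request` records with a duration and a failure flag, plus a busy/capacity count for whichever resource is most constrained. Latency is reported as a 95th percentile using the nearest-rank method.

```python
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float  # time taken to service the request
    failed: bool


def derived_indicators(requests, window_seconds, busy, capacity):
    """Compute latency, traffic, error rate and saturation for one window.

    `busy` and `capacity` describe the most constrained resource
    (for example worker threads or connections); both are assumptions.
    """
    n = len(requests)
    saturation = busy / capacity  # how full the constrained resource is
    if n == 0:
        return {"latency_p95_ms": 0.0, "traffic_rps": 0.0,
                "error_rate": 0.0, "saturation": saturation}
    durations = sorted(r.duration_ms for r in requests)
    rank = (95 * n + 99) // 100  # nearest-rank 95th percentile: ceil(0.95 * n)
    return {
        "latency_p95_ms": durations[rank - 1],              # latency
        "traffic_rps": n / window_seconds,                  # demand on the system
        "error_rate": sum(r.failed for r in requests) / n,  # share of failed requests
        "saturation": saturation,
    }
```

Ten requests taking 10 ms to 100 ms over a 10-second window, with two failures and 3 of 4 workers busy, give a p95 latency of 100 ms, 1 request per second, a 0.2 error rate and 0.75 saturation.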

Prometheus & Grafana can give high-level monitoring of a static environment, but they are two separate products, and time is money. Being able to fix issues without war rooms and handovers from support back to development is time-critical. Do you have enough diagnostic data, skills or tooling? Is your monitoring provider able to support you on the phone with middleware expertise throughout the outage? Just how important is your integration infrastructure? The Nastel Platform also includes Nastel Navigator to fix the problem quickly.

As business requirements change, it is crucial to be able to change the dashboards and alerts in line with them. Nastel XRay is built with this as a core focus. With its time series database, dashboards and alerting are all integrated. The dashboards are created dynamically and change automatically as the infrastructure and message flows change. This minimizes set-up time, so monitoring does not become a blocker to the business. Rather than asking for a screenshot of a monitoring environment in use, ask for a demo of how long it takes to build a dashboard.

Nastel is the leader in Integration Infrastructure Management (i2M). You can read how a leading retailer used Nastel software to manage their transactions here and you can hear their service automation leader discussing how he used Nastel software to manage changing thresholds for peak periods here.

Is your IT environment evolving like this? What are your experiences with Prometheus and Grafana? Please leave a comment below or contact me directly and let’s discuss it.

Nastel Technologies – Intelligence from Integration

Leading Global Provider of Messaging Middleware-Centric Performance & Transaction Management

Banking is about financial transactions. These are executed by sending payments and instructions over middleware. If you control the middleware, then you control the business. If the middleware fails, then the company fails. If you can see and analyze the transactions going through the middleware, you can see the business itself. And if you have real-time analytics of that data and it’s automatically actioned, then you can innovate and accelerate the company’s development. The Nastel i2M (integration infrastructure management) platform enables configuration and reconfiguration in real-time, allowing exploration of streaming data as it comes in, enabling business agility and innovation.

Founded in 1994 by CEO David Mavashev and headquartered in Plainview, NY, Nastel Technologies are the global experts at exploiting messaging middleware environments and the contents of messages to improve the business and technical understanding of complex enterprise application stacks. They achieve this by managing the messaging middleware, monitoring entire application stacks end-to-end, providing proactive alerts, tracing and tracking transactions, visualizing, analyzing, and reporting on machine data in meaningful ways, simplifying root cause analysis, and providing data to support business decisions.

Nastel’s i2M Platform provides advanced management capabilities to messaging middleware administrators. Integration Infrastructure Management is the support of multi-middleware environments in terms of configuration, performance and availability monitoring, and alerting. It provides insight into business transactions using message tracking, analytics, and Management Information (MI) for decision support and capacity planning of the middleware-centric applications, the technology that connects them and the business data itself.

“The solution helps the business as a whole. Business users and other personnel including middleware admins, application developers, operations, and support groups are amongst the solution’s users and beneficiaries,” says David. He is hyper-focused on helping, and understanding the needs of, customers. Nastel’s i2M platform helps the messaging admins manage their environment at scale, with security, repeatability, speed, reduced risk, and minimal need to understand the underlying operating systems and environments such as mainframe, Windows, virtual machines, cloud, and containers. Similarly, it allows them to use a single tool to manage multiple messaging middleware products such as IBM MQ, Kafka, Solace, and TIBCO without needing a detailed understanding of each technology.

Typically, companies have governance processes requiring that only the middleware team change and administer the integration infrastructure. An administration team of around ten people usually handles requests from hundreds and, in large organizations, thousands of support and development personnel. This takes time, so the middleware team is seen as a bottleneck to the business. The business is delayed in getting new services and products to market and faces competitive, reputational, financial, and operational risks, potentially losing market share.

“Our self-service solution with its granular security model, gives access to thousands of users and empowers them to control their part of the middleware configuration for development or QA purposes,” explains David. “They can only change the relevant objects and are able to “view but not change” or “create but not delete” for a very strict set of objects based on rules or naming conventions. This allows them to proceed with their work and for the middleware team to work on higher-value activities.”
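The “view but not change” and “create but not delete” rules in the quote can be illustrated with a small pattern-based permission check. This is a hypothetical sketch, not Nastel’s implementation: each rule grants a user group a set of actions on objects whose names match a glob pattern, and anything not explicitly granted is denied. The group names and object-naming scheme are invented for illustration.

```python
from fnmatch import fnmatchcase

# Hypothetical rules: (user group, object-name glob pattern, allowed actions).
RULES = [
    # Developers may view, create and change their own DEV objects, but not delete them.
    ("payments-dev", "DEV.PAYMENTS.*", {"view", "create", "change"}),
    # The same developers may only view production objects ("view but not change").
    ("payments-dev", "PROD.PAYMENTS.*", {"view"}),
    # The middleware team keeps full control over everything.
    ("middleware-admins", "*", {"view", "create", "change", "delete"}),
]


def is_allowed(group, object_name, action):
    """Grant an action only if a rule for this group matches the object name.
    Anything not explicitly granted is denied."""
    return any(
        rule_group == group and fnmatchcase(object_name, pattern) and action in actions
        for rule_group, pattern, actions in RULES
    )
```

Default-deny keeps the rule set small: developers see only the objects their naming convention covers, while the middleware team’s wildcard rule preserves full administrative control.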

Notably, 7 of the top 10 banks in the world use Nastel to support their business. For instance, one of the most prominent American banks recently replaced BMC with the Nastel solution because their auditors said they had a Key Person Dependency: their middleware monitoring needed so much configuration that only one person understood it. If that person left the company, they’d be without support and maintenance for their monitoring and hence couldn’t release new business applications. The customer said, “The biggest thing that helped you guys is the flexibility of the company and openness to be a partner rather than just a vendor. We could come to you and say, ‘look this is our need or requirement’ and you did everything you needed to meet those requirements.” Now the bank will never again be in a situation where it takes two weeks to locate a message in their system. The Nastel solution fully replaced BMC for more than 6,000 users, 800 distributed applications, and IBM z/OS within 11 months. It paid for itself with the savings from reducing the ongoing Tivoli license costs.

Nastel has committed significant investment for the near future to support the evolving needs of its existing customers, by supporting a wide and growing range of the latest and legacy integration technologies and the ever-changing requirements of the banking business, and it is also increasing its presence in Europe. “We are also broadening our range to support the entire software development lifecycle with a particular investment in automation, APIs and performance benchmarking and working on various blockchain-based innovations.”

This article originally appeared in MyTechMag Banking Tech Special Edition September 2022. To read the full magazine, click here.

Introduction

Service automation is the process of automating processes, events, tasks, and business functions. It offers multidimensional visibility of the business, which helps you streamline the business process.

It integrates the tools for each functional domain with various layers of automation within a unified interface or workflows.

Benefits Of Service Automation

There are several benefits of service automation that you should be aware of while developing your business:

1. It Reduces The Chances Of Human Error

When people perform monotonous tasks over and over again, the chances of human error increase. Likewise, when work orders are not updated accurately, errors become more likely.

Service automation reduces the chances of human error because machines do not get bored or tired, even when working continuously for hours.

Using AI improves the chances of getting the job done on time and with ease, and it considerably reduces human error. However, you must still make sure the automated process itself is set up correctly.

2. Spend & Reporting Insights Offer Better Visibility

It offers current and predictive views of your business operations: visual analytics provide current, historical, and predictive insights. You have to understand the facts to reach your goals.

Once you have accurate insights into your business, things become easier. You can make the right decisions for your business, and proper planning will help you meet your goals within a specific time frame.

It also makes managing your financial spending easier, and once you use AI, your brand gains better visibility.

3. Provides Compliance

Compliance management helps you to address operational risk.

You can view the compliance records, which give your company’s managers accurate data about activities over time. The more often you make the right choices, the more effectively you can reach your goals.

4. Enhances Operational Efficiency

Service operation will help you in making and streamlining the process. It will increase the operational efficiency of your business to a great extent. The work order management will become smooth.

It streamlines the entire work order process according to priority, helping you decide which work needs your attention first, so the whole process becomes smooth and effective.

By focusing your attention on the tasks that truly deserve it, service automation helps you develop your business in the right direction and meet your objectives.

5. Lowers Cost

Service automation lowers costs across your organization and reduces the running costs of your systems, since AI can easily take over administrative tasks.

As a result, you can operate with fewer resources and a leaner, more efficient team, meeting your goals within a specific time frame while eliminating unnecessary costs.

6. Ensures Brand Preservation

As you reach your goals, your brand's image improves and becomes easier to preserve.

In the retail sector, service automation directly affects the shopping experience you offer your customers.

7. Improves The Rate Of Customer Satisfaction

Service automation raises customer satisfaction, provided you use it to reduce the chance of complications at key points in the process.

Throughout the process, your customers receive the service they want from you, correctly and on time.

As customer satisfaction with your brand grows, so does the likelihood of increased sales.

Final Takeaway

These are some of the key points to consider when you adopt service automation. It is an important part of developing your business: it reduces the chance of error, increases efficiency, and, combined with proper planning, helps you meet your objectives within a specific time frame.

How does this apply to Nastel?

In Integration Infrastructure Management (i2M), Nastel Navigator is used for automated deployment, migration, and remediation of middleware and can be used in conjunction with CI/CD pipelines. Nastel AutoPilot identifies issues in middleware performance and the behaviour of middleware-centric applications and automatically fixes them. AutoPilot and Navigator are components of the Nastel i2M Platform, providing service automation for middleware environments including IBM MQ, Apache Kafka, Solace PubSub+, TIBCO EMS and many more.

Author Bio:

Charles Simon is a vibrant, professional blogger and writer. He graduated from the University of California, Berkeley, in business management. He is a business owner by profession but a passionate writer at heart. Charles is the owner and co-founder of searchenginemagazine.com, dreamandtravel.com, tourandtravelblog.com, realwealthbusiness.com, financeteam.net, onlinehealthmedia.com, and followthefashion.org.

Introduction

Artificial Intelligence and Machine Learning are both complex topics, and there is a lot to know about them. AI has already changed how we live. Before looking at the differences, let's cover some background on each.

Put simply, AI is the broad field of building systems that can solve a wide range of tasks, while Machine Learning is a subset of AI focused on learning to perform specific tasks from data. So all Machine Learning is AI, but not all AI is Machine Learning.

As a Python developer, I'll walk you through how AI and Machine Learning relate to each other and discuss various aspects of both. I hope this article helps clear up any confusion.

Artificial Intelligence

In simple terms, Artificial Intelligence refers to computer programs that mimic human functions such as problem-solving and learning from errors and experience. A computer system uses math and logic to simulate reasoning. We humans learn from knowledge, information, and data and then make decisions; with AI, computers do something similar.

Machine Learning

People hold many different ideas about machine learning, but at its core it is an application of AI. In machine learning, computers use mathematical models to learn from data instead of following explicit, hand-written instructions for each task. It is the process that lets computers learn new things and improve with experience.

Confusion About AI And Machine Learning

Some people think the two terms mean the same thing; others think they are entirely different. In reality, they are connected but not identical: whenever we talk about machine learning, we are talking about a kind of AI. Machine learning is a part, or subset, of AI.

Connection Between AI And Machine Learning

Computers that use AI can behave in human-like ways and carry out tasks independently. The computer develops this intelligence by learning from experience, and machine learning is the name for that process. Businesses of every size, from multinational companies to grocery stores, can use AI and machine learning.

Machine learning is a way of teaching computers to work in a manner loosely inspired by the human brain. Just as we humans gain experience over time through learning, an AI-enabled computer improves through a similar process, and that process is machine learning. So while the two are distinct, they work closely together to solve critical problems.

The Process of AI And Machine Learning

You may be wondering how the two work together to reach a solution. The following steps outline the process:

- First Step – Machine learning and other techniques are used to build an AI system.

- Second Step – Machine learning models are developed by analyzing patterns in data.

- Third Step – Data scientists evaluate the patterns in the information and data, and the machine learning model is built on this information.

- Fourth Step – Repeating the process helps the model produce more accurate results, until it can finally perform the task.
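The repeat-and-improve loop in the fourth step can be illustrated with a tiny example. The sketch below is an illustrative assumption, not the author's method: it uses plain Python and gradient descent, one common training technique, to fit a straight line y = w*x + b to toy data. The function names `train` and `mean_squared_error`, the data, the learning rate, and the number of passes are all hypothetical choices.

```python
# A minimal sketch of "learning by repetition": fit y = w*x + b with gradient descent.
# The toy data, learning rate, and number of passes are illustrative assumptions.


def mean_squared_error(w: float, b: float, xs: list[float], ys: list[float]) -> float:
    """Average squared gap between the model's predictions and the true values."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs: list[float], ys: list[float], learning_rate: float = 0.05, epochs: int = 500):
    """Repeatedly nudge w and b in the direction that lowers the error."""
    w, b = 0.0, 0.0  # start with a model that knows nothing
    n = len(xs)
    history = []  # error after each pass, to show improvement over time
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mean_squared_error(w, b, xs, ys))
    return w, b, history


if __name__ == "__main__":
    # Hypothetical data following the pattern y = 2x + 1, which the model must discover.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2 * x + 1 for x in xs]
    w, b, history = train(xs, ys)
    print(f"learned w={w:.2f}, b={b:.2f}")
    print(f"error after pass 1: {history[0]:.4f}, after pass {len(history)}: {history[-1]:.8f}")
```

Each pass measures how wrong the current model is and adjusts it slightly, so the error shrinks with repetition. Real systems use far more data and libraries built for the purpose, but the underlying idea of iterating toward better accuracy is the same.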

Capabilities

These days, many companies are adopting machine learning in their businesses. AI and machine learning open up new opportunities and new ways to help organizations of all kinds. Here are a few examples.

These examples show how AI and machine learning help make people's lives easier and faster.

Predictive Analysis

Businesses often need to predict outcomes before they happen. For example, a marketing company may want to understand consumers and their buying habits. Predicting future behavior early helps companies understand different behavioral patterns. Machine learning does this by analyzing large amounts of historical information and data to find patterns and relationships.

Recommendation Engines

Recommendation engines use AI and machine learning to analyze data, such as past purchases or ratings, and suggest the items most likely to be relevant, for example products a customer may want to buy next.
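To make the idea concrete, here is a minimal sketch of user-based collaborative filtering, one common recommendation technique (an assumption for illustration; the article does not name a specific method). The users, items, and ratings in `RATINGS` and the function names `cosine_similarity` and `recommend` are hypothetical. The sketch finds users whose ratings resemble yours and suggests items they rated highly that you have not tried.

```python
import math

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}.
RATINGS = {
    "alice": {"laptop": 5, "mouse": 4, "keyboard": 5},
    "bob": {"laptop": 4, "mouse": 5, "monitor": 4},
    "carol": {"keyboard": 4, "monitor": 5, "headphones": 4},
    "dave": {"laptop": 5, "keyboard": 4, "headphones": 2},
}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """How alike two users' tastes are, based on the items both have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict[str, dict[str, float]], top_n: int = 2) -> list[str]:
    """Rank unseen items by a similarity-weighted average of other users' ratings."""
    seen = ratings[user]
    weighted_sums: dict[str, float] = {}
    weight_totals: dict[str, float] = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(seen, their_ratings)
        if similarity <= 0:
            continue  # ignore users with nothing in common
        for item, rating in their_ratings.items():
            if item in seen:
                continue  # only recommend things the user has not rated yet
            weighted_sums[item] = weighted_sums.get(item, 0.0) + similarity * rating
            weight_totals[item] = weight_totals.get(item, 0.0) + similarity
    ranked = sorted(
        weighted_sums,
        key=lambda item: weighted_sums[item] / weight_totals[item],
        reverse=True,
    )
    return ranked[:top_n]


if __name__ == "__main__":
    print(recommend("alice", RATINGS))
```

Production recommendation engines handle millions of users and items and often use more sophisticated models, but the principle of learning from other people's behavior is the same.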

Speech Recognition

Speech recognition is one of the many things AI and machine learning make possible, and many people use it in their everyday lives. It converts spoken language into text, and related natural language techniques also help computers process written language.

Video And Image Processing

Our smartphones can identify faces and recognize the contents of images and videos. For example, I use face recognition to unlock my screen. This capability also helps users tag and categorize images and videos so that they can later search for these items online.

Sentiment Analysis

Sentiment analysis is an impressive capability. It lets computers classify the attitude expressed in speech or written text as positive, negative, or neutral.
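Sentiment analysis is often framed as a text classification problem. As an illustrative sketch (an assumption, not a method described in this article), here is a tiny multinomial Naive Bayes classifier in plain Python that learns positive, negative, and neutral labels from a handful of example sentences. The class name `NaiveBayesSentiment`, the sentences in `TRAINING_DATA`, and the add-one smoothing choice are all hypothetical; a real system would need far more data.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labelled examples the model learns from.
TRAINING_DATA = [
    ("I love this product, it is great", "positive"),
    ("Excellent service and friendly staff", "positive"),
    ("What a wonderful, happy experience", "positive"),
    ("I hate this, it is terrible", "negative"),
    ("Awful service and rude staff", "negative"),
    ("What a horrible, disappointing experience", "negative"),
    ("The package arrived on Tuesday", "neutral"),
    ("The store opens at nine", "neutral"),
    ("It is a blue shirt", "neutral"),
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    def __init__(self) -> None:
        self.word_counts: defaultdict[str, Counter] = defaultdict(Counter)  # label -> word frequencies
        self.label_counts: Counter = Counter()  # label -> number of examples
        self.vocabulary: set[str] = set()

    def fit(self, examples: list[tuple[str, str]]) -> "NaiveBayesSentiment":
        """Learn word frequencies for each sentiment label."""
        for text, label in examples:
            self.label_counts[label] += 1
            for word in tokenize(text):
                self.word_counts[label][word] += 1
                self.vocabulary.add(word)
        return self

    def predict(self, text: str) -> str:
        """Return the label with the highest log-probability for the text."""
        total_examples = sum(self.label_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_score = "", -math.inf
        for label, example_count in self.label_counts.items():
            score = math.log(example_count / total_examples)  # prior probability of the label
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                # Add-one (Laplace) smoothing so unseen words do not zero out the score.
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    model = NaiveBayesSentiment().fit(TRAINING_DATA)
    for sentence in ["great service, I love it", "terrible, rude staff", "the package arrived at the store"]:
        print(f"{sentence!r} -> {model.predict(sentence)}")
```

The model simply learns which words tend to appear with each label and picks the most probable label for new text. Modern sentiment systems use much richer models, but this shows how a computer can learn to tell positive, negative, and neutral statements apart from examples.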

Benefits

AI and machine learning each have their own benefits, but when they work together, we gain many more.

Data Input

Businesses need to discover significant information in their data, and AI and machine learning can draw on a wide range of data sources to find it.

Operational Efficiency

Many processes can now run automatically, for example in factories and in data entry. Automating them cuts costs, saves time, and lets companies redirect resources to other areas.

Better And Faster

AI helps identify and reduce human error and improves efficiency and data integrity. It lets companies work better and faster, with decisions informed by AI and machine learning.

To Conclude

I hope you now have a better understanding of AI and machine learning. The two are connected and work together to achieve better results.

If you have any queries, feel free to ask in the comment section below.

Thank you.

Harmaini Zones is a vibrant, professional blogger and writer. She graduated from the University of California, Berkeley, in business management. She is a business owner by profession but a passionate writer at heart. She is the owner of Getmeseen.net, toppreference.com, bigjarnews.com, okeymagazine.com, globalbusinessdiary.com, smallbusinessjournals.com, moneyoutlined.com, theglobalmagazine.org,